Scraping Data using Selenium | Web Scraping | Python

- Hackers Realm

- Oct 13, 2023

- 4 min read

Selenium is an open-source browser automation tool that allows you to interact with websites just like a human user would. Unlike other scraping techniques that rely solely on parsing HTML content, Selenium can navigate through web pages, click buttons, submit forms, and handle dynamic content, making it particularly useful for scraping data from websites with complex structures or those that require user interactions.

In this tutorial, we will explore the fundamentals of web scraping using Selenium. We will cover the setup of Selenium, demonstrate basic web interactions, and provide examples of how to scrape data from websites.

You can watch the video-based tutorial with a step-by-step explanation down below.

Import Modules

from selenium import webdriver

from selenium.webdriver.common.by import By

import pandas as pdwebdriver - software component or library that is used to automate interactions with web browsers.

selenium.webdriver.common.by - provides a set of locator strategies to find and interact with web elements on a web page.

pandas - provides easy-to-use data structures and functions for working with structured data, primarily in the form of tabular data.

Set path for Chrome Driver

We will set the path of our chrome driver exe file.

path = 'C:\\Chromedriver.exe'Give the path of your file. My chrome driver file is on the C drive.

Next create the browser.

browser = webdriver.Chrome(executable_path = path)We are accessing the chrome browser in this tutorial. You can access different browsers as per your requirement.

This is a fundamental step when using Selenium for web automation, testing, or web scraping with Google Chrome.

Chrome() is a constructor for creating a WebDriver instance that controls the Google Chrome browser. When you call webdriver.Chrome(), it initializes a new Chrome browser window or tab that can be controlled programmatically using Selenium.

Next let us define a url from where we will scrap our data.

Next we will open the url page from browser that we have created.

# open the page url in chrome

browser.get(url)We use browser.get(url) to navigate to the specified URL.

After opening the URL, you can proceed to interact with the web page, extract data, or perform other tasks using Selenium.

Scrap the data

In this tutorial we will scrap the country details from the website. First we will get the country names.

# get country names

country_list = browser.find_elements(By.XPATH, "//h3[@class='country-name']")browser.find_elements() find all elements that match the specified XPath expression. This will give us a list of web elements.

You can replace the XPath expression with the appropriate one that matches the structure of the web page you are working with.

Next we will parse the data.

# parse the data

countries = []

for country in country_list:

# get the text data

temp = country.text

countries.append(temp)You loop through each element in the country_list, assuming that country_list contains the elements you want to extract text from.

For each country, you use country.text to extract the text data (country name in this case) from the element.

You append the extracted country name to the countries list.

After running this code snippet, the countries list will contain all the country names that were extracted from the country list elements.

Next let us get the population of the country.

# # get the population for the country

population_list=browser.find_elements(By.CLASS_NAME,'country-population')We use browser.find_elements(By.CLASS_NAME, 'country-population') to find all elements with the specified class name ('country-population'). This will give us a list of web elements.

This code snippet will help you extract and display the population data found on the web page for each country.

Next let us parse the population data.

# parse the data

populations = []

for population in population_list:

# get the text data

temp = population.text

populations.append(temp)You loop through each element in the population_list, assuming that population_list contains the elements you want to extract text from (e.g., population numbers).

For each population_element, you use population_element.text to extract the text data (population number) from the element.

You append the extracted population_data to the populations list.

After running this code snippet, the populations list will contain all the population data that was extracted from the population_list elements.

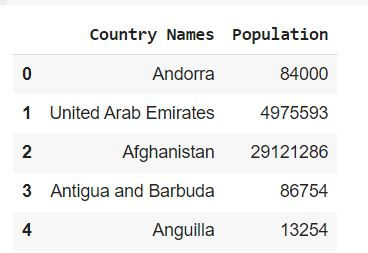

Store the Scraped Data

Next we will store the data in a structured format.

data = pd.DataFrame()

data['Country Names'] = countries

data['Population'] = populations

data.head()

We create an empty DataFrame using pd.DataFrame().

We add two columns to the DataFrame: 'Country Names' and 'Population', and populate them with the data from the countries and populations lists, respectively.

Finally, we use data.head() to display the first few rows of the DataFrame. This will give you a preview of the data in tabular form.

Next let us save the data to an excel file.

# save the data

data.to_excel('countries.xls', index=False)data.to_excel('countries.xls', index=False) is used to save the data DataFrame to an Excel file named "countries.xls".

The index=False argument specifies that the DataFrame index (row numbers) should not be included in the Excel file. If you don't want to omit the index, you can remove this argument or set it to True (the default behavior).

After running this code snippet, you'll have an Excel file named "countries.xls" in the same directory as your Python script or notebook, containing the data from the data DataFrame.

Close the Driver

Next we will close the browser that we created at the start of the tutorial.

browser.quit()browser.quit() is called to close the browser window or tab and release any resources associated with it.

It's good practice to include browser.quit() at the end of your Selenium script to clean up after your automation tasks, ensuring that the browser doesn't remain open unnecessarily. This helps in preventing memory leaks and ensuring that your script doesn't interfere with subsequent browser sessions or other processes.

Final Thoughts

Selenium is capable of handling websites with dynamic content generated by JavaScript. This is particularly useful for scraping data from modern web applications.

Selenium provides a robust solution for web scraping, as it can handle complex and interactive websites, login pages, and CAPTCHA challenges.

You can use Selenium with various browsers like Chrome, Firefox, Edge, and Safari, ensuring that your web scraping works consistently across different platforms.

Selenium-based web scraping is generally slower than purely HTTP-based scraping techniques like using libraries such as requests and Beautiful Soup. This is because Selenium needs to load the entire web page and execute JavaScript.

In conclusion, Selenium is a powerful tool for web scraping, especially when dealing with complex, dynamic websites. However, it's essential to weigh the advantages against the considerations and to choose the right tool for your specific scraping needs.

Get the project notebook from here

Thanks for reading the article!!!

Check out more project videos from the YouTube channel Hackers Realm

Comments